Open Letter Urges Pause on AI Research

The Future of Life Institute has issued an open letter calling for a six-month pause on some forms of AI research. Citing “profound risks to society and humanity,” the group is asking AI labs to pause research on AI systems more powerful than GPT-4 until more guardrails can be put around them.

“AI systems with human-competitive intelligence can pose profound risks to society and humanity,” the Future of Life Institute wrote in its March 22 open letter, which you can read here. “Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources. Unfortunately, this level of planning and management is not happening.”

Without an AI governance framework – such as the Asilomar AI Principles – in place, we lack the proper checks to ensure that AI develops in a planned and controllable manner, the institute argues. That’s the situation we face today, it says.

“Unfortunately, this level of planning and management is not happening, even though recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control,” the letter states.

Absent a voluntary pause by AI researchers, the Future of Life Institute urges government action to prevent harm caused by continued research on large AI models.

Top AI researchers were split on whether or not to pause their research. Nearly 1,200 individuals, including Turing Award winner Yoshua Bengio, OpenAI co-founder Elon Musk, and Apple co-founder Steve Wozniak, signed the open letter before a pause on the signature-counting process itself had to be instituted.

However, not everybody is convinced that a pause on researching AI systems more powerful than GPT-4 is in our best interests.

“The letter to pause AI training is ludicrous,” Bindu Reddy, the CEO and founder of Abacus.AI, wrote on Twitter. “How would you pause China from doing something like this? The US has a lead with LLM technology, and it’s time to double-down.”

“I did not sign this letter,” Yann LeCun, the chief AI scientist for Meta and a Turing Award winner (he won it together with Bengio and Geoffrey Hinton in 2018), said on Twitter. “I disagree with its premise.”

LeCun, Bengio, and Hinton, whom the Association for Computing Machinery (ACM) has dubbed the “Fathers of the Deep Learning Revolution,” kicked off the current AI craze more than a decade ago with their research into neural networks. Fast forward 10 years, and deep neural nets are the predominant focus of AI researchers around the world.

Following their initial work, AI research was kicked into overdrive with the publication of Google’s Transformer paper in 2017. Soon, researchers were noting unexpected emergent properties of large language models, such as the capability to learn math, chain-of-thought reasoning, and instruction-following.

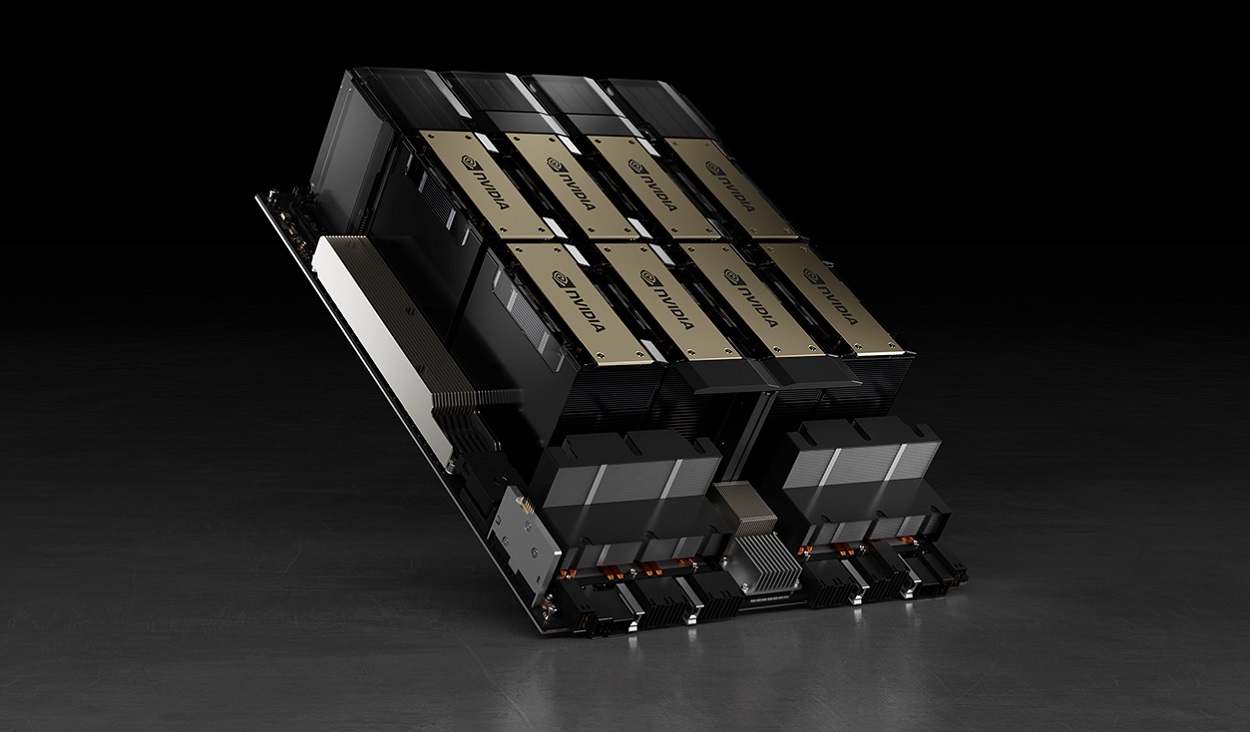

The general public got a taste of what these LLMs can do in late November 2022, when OpenAI released ChatGPT to the world. Since then, the tech world has been consumed with building LLMs into everything it does, and the arms race to build ever-bigger and more capable models has gained extra steam, as seen with the release of GPT-4 on March 14.

While some AI experts have raised concerns about the downsides of LLMs – including a tendency to lie, the risk of private data disclosure, and potential impact on jobs – those concerns have done nothing to quell the general public's enormous appetite for new AI capabilities. We may be at an inflection point with AI, as Nvidia CEO Jensen Huang said last week. But the genie would appear to be out of the bottle, and there’s no telling where it will go next.

This article originally appeared on sister site Datanami.
