Analyzing the AI Landscape: Stanford’s 2023 AI Index Offers a Comprehensive View

The Stanford Institute for Human-Centered Artificial Intelligence (HAI) has released its 2023 Artificial Intelligence Index, which tracks AI’s impact and progress. The data-driven report takes a deep dive into AI research, ethics, policy, public opinion, and economics.

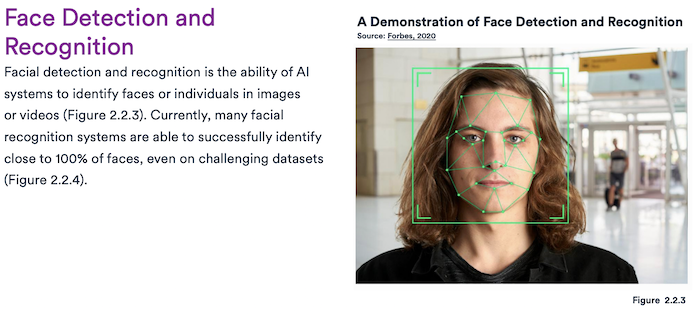

Among the key findings, AI research continues to expand, with pattern recognition, machine learning, and computer vision among its most active topics. The report notes that the number of AI publications more than doubled between 2010 and 2021. Meanwhile, industry has pulled ahead of academia: in 2022, industry produced 32 significant machine learning models, compared with just three from academia. The study attributes this gap to the vast resources needed to train today’s large models.

Traditional AI benchmarks such as ImageNet (image classification) and SQuAD (reading comprehension) are no longer sufficient for measuring progress, prompting new benchmark suites such as BIG-bench and HELM. In a Stanford article, Vanessa Parli, HAI associate director and AI Index steering committee member, explained that many AI benchmarks have reached a saturation point where year-over-year improvements are marginal, and that researchers need to develop new benchmarks based on how society wants to interact with AI. She cites ChatGPT as an example: it passes many benchmarks but still frequently gives incorrect information.

The report also examined ethical issues such as bias and misinformation. With the rise of popular generative AI models like DALL-E 2, Stable Diffusion, and, of course, ChatGPT, ethical misuse of AI is increasing. According to the AIAAIC database, an independent repository that tracks incidents of AI misuse, the number of AI incidents and controversies has increased 26-fold since 2012. Interest in AI ethics is also growing rapidly: the number of submissions to FAccT, a leading AI ethics conference, has more than doubled since 2021 and increased tenfold since 2018.

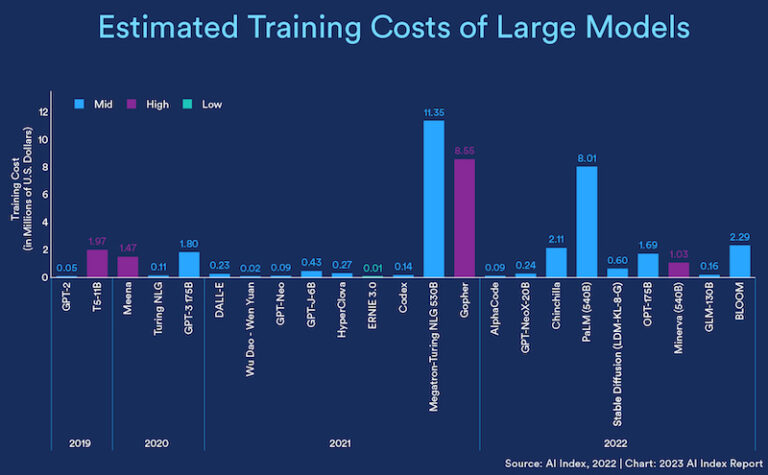

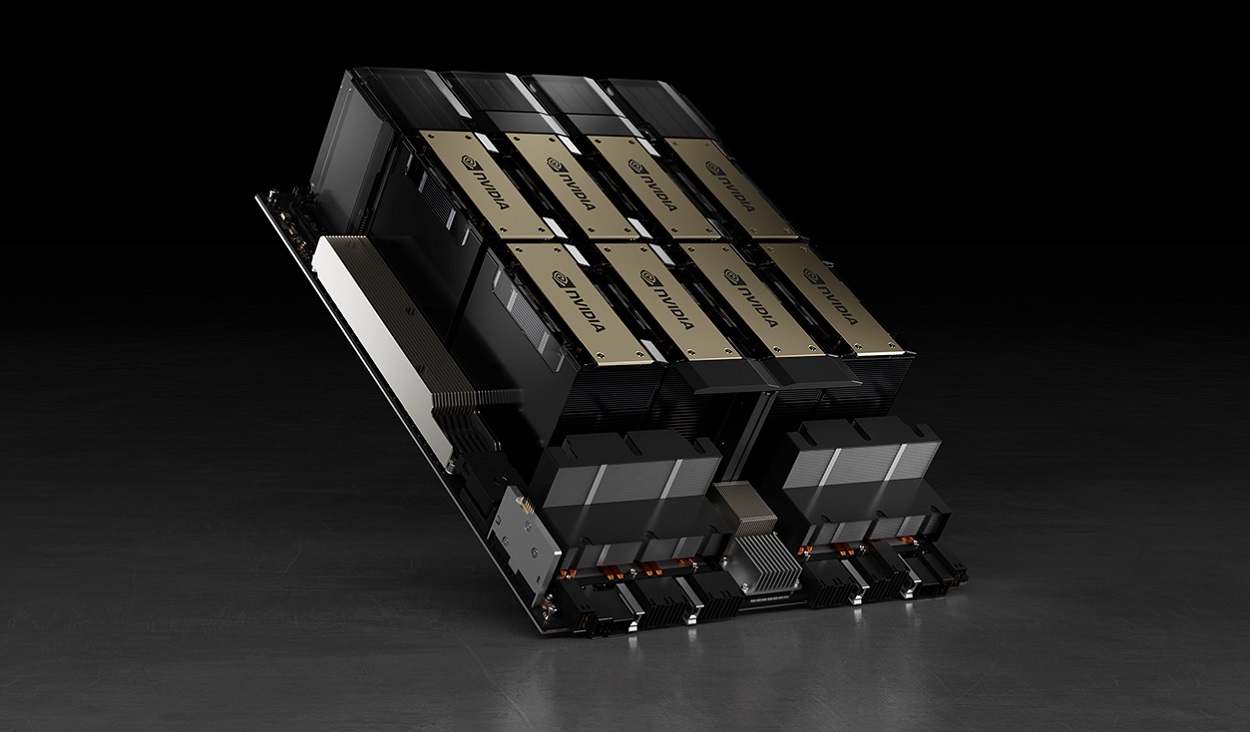

Large language models are breaking the bank as they grow. The report cites Google’s PaLM, released in 2022, which was 360 times larger and cost 160 times more to train than OpenAI’s GPT-2 from 2019. In general, the larger the model, the higher the training cost. The study estimates that DeepMind’s Chinchilla and Hugging Face’s BLOOM cost $2.1 million and $2.3 million to train, respectively.

Globally, private investment in AI fell 26.7% from 2021 to 2022, and funding for AI startups also slowed. Over the past decade, however, AI investment has grown considerably: the report shows that private investment in AI in 2022 was 18 times greater than in 2013. Corporate AI adoption has also plateaued. The proportion of companies adopting AI more than doubled between 2017 and 2022, the report says, but it has recently leveled off at roughly 50% to 60%.

Governments are also paying growing attention to AI. The AI Index analyzed the legislative records of 127 countries and found that 37 bills containing “artificial intelligence” were passed into law in 2022, compared with just one in 2016. The study also found that U.S. government AI-related contract spending has risen roughly 2.5 times since 2017. Courts are seeing a jump in AI-related legal cases as well: in 2022 there were 110 such cases in U.S. state and federal courts, mostly involving civil, intellectual property, and contract law.

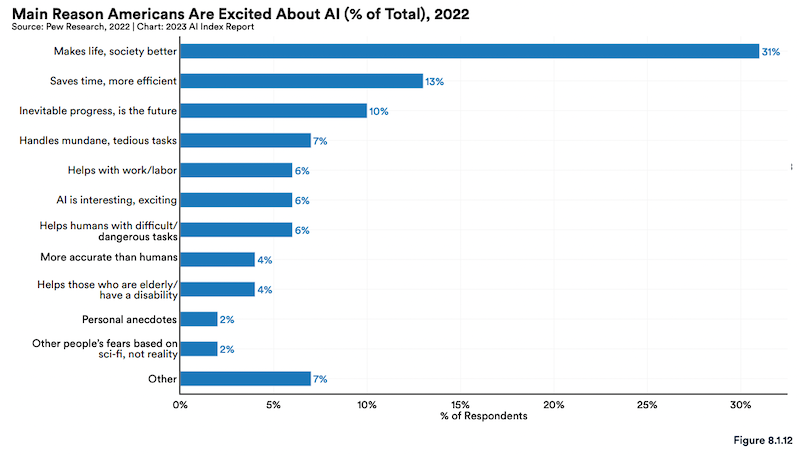

The AI Index also draws on a Pew Research Center survey of Americans’ opinions about AI. Of more than 10,000 panelists, 45% reported feeling equally concerned and excited about the use of AI in daily life, and 37% said they were more concerned than excited. Just 18% were more excited than concerned. Among the main concerns, 74% were very or somewhat concerned about AI being used to make important life decisions for people, and 75% were uneasy about AI being used to know people’s thoughts and behaviors.

For anyone following AI’s rise into the cultural spotlight, the 2023 AI Index covers many more topics. To access the full report, visit this link.