Nvidia Tees Up New Platforms for Generative Inference Workloads like ChatGPT

Today at its GPU Technology Conference, Nvidia discussed four new platforms designed to accelerate AI applications. Three target inference workloads for generative AI applications, such as generating text, images, and video, while the fourth is aimed at boosting recommendation models, vector databases, and graph neural nets.

Generative AI has surged in popularity since November, when OpenAI released ChatGPT to the world. Companies are now looking to use conversational AI systems (sometimes called chatbots) to service customer needs. That is great news for Nvidia, which makes the GPUs that are typically used to train large language models (LLMs) such as ChatGPT, GPT-4, BERT, or Google’s PaLM.

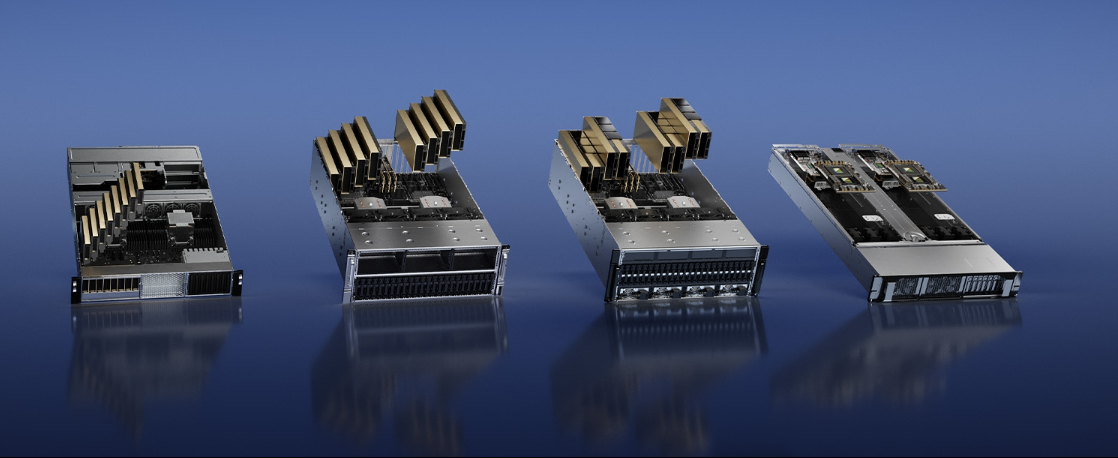

Nvidia previews four inference platforms. From left to right, they incorporate the L4 GPU, the L40 GPU, the H100 NVL, and the Grace Hopper.

But GPUs are not only used to train LLMs and generative computer vision models such as OpenAI’s DALL-E; they can also accelerate inference, the stage where a trained model actually generates output. To that end, Nvidia today revealed new products aimed at inference workloads.

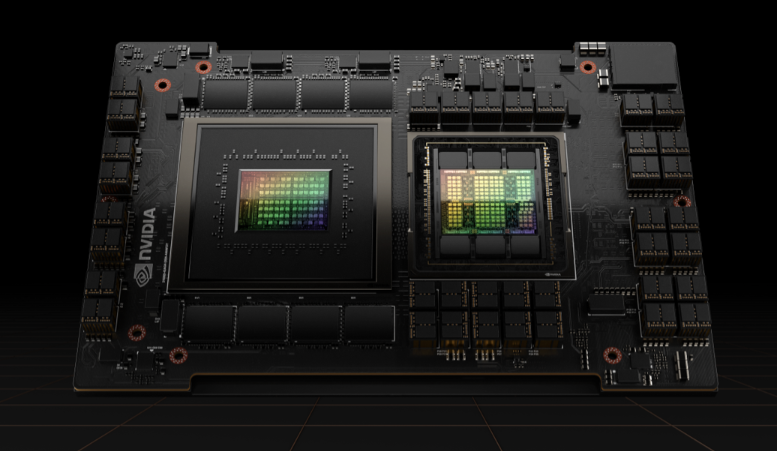

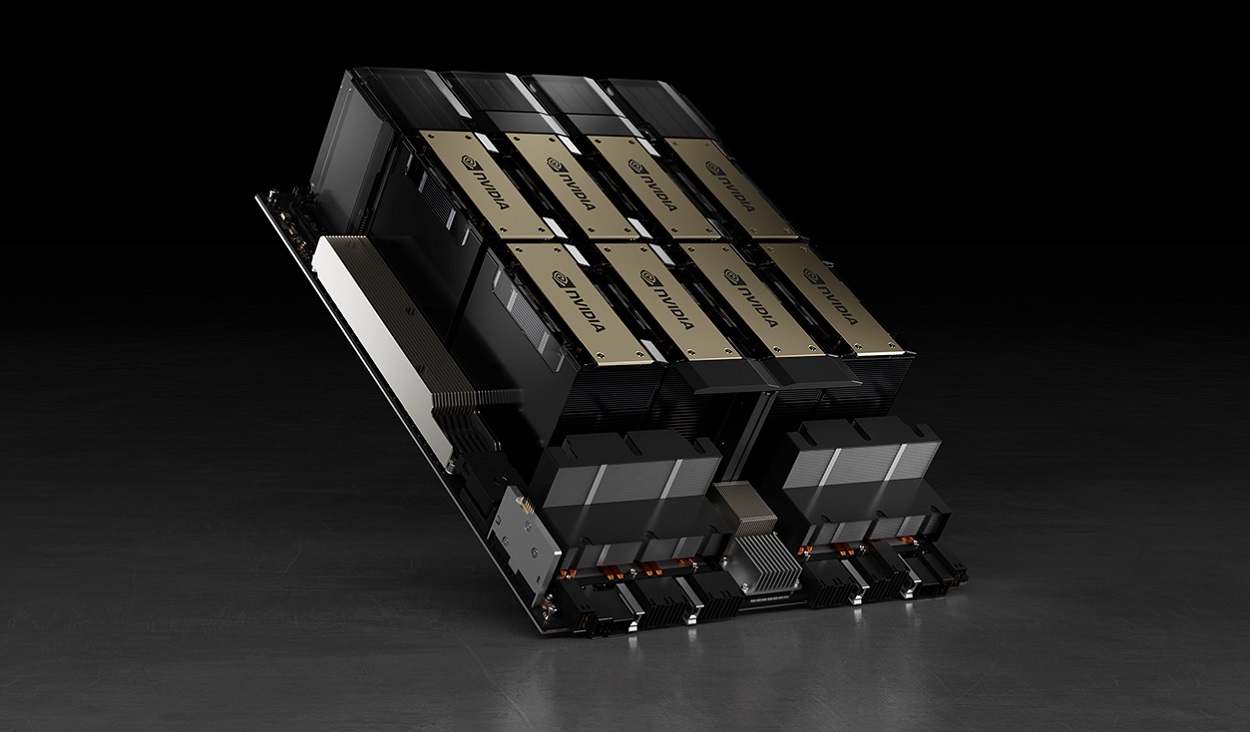

The first is the Nvidia H100 NVL, aimed at large language model deployment. Nvidia says this new H100 configuration is “ideal for deploying massive LLMs like ChatGPT at scale.” It sports 188GB of memory and features a “transformer engine” that the company claims can deliver up to 12x faster inference performance for GPT-3 than the prior-generation A100, at data center scale.

The H100 NVL is composed of two previously announced H100 GPUs built on the PCIe form factor connected via an NVLink bridge, and “will supercharge” LLM inferencing, says Ian Buck, Nvidia’s vice president of hyperscale and HPC computing.

“These two GPUs work as one to deploy large language models and GPT models from anywhere from 5 billion parameters all the way up to 200 [billion parameters],” Buck said during a press briefing Monday. “It has 188GB of memory and is 12x faster, this one GPU, than the throughput of a DGX A100 system that’s being used today everywhere. I’m really excited about the Nvidia H100 NVL. It’s going to help democratize the ChatGPT use cases and bring that capability to every server in every cloud.”
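To put the 188GB figure and Buck’s 5-to-200-billion-parameter range in perspective, the Python sketch below estimates how much memory a model’s weights alone occupy at a few common precisions. It is a rough illustration under stated assumptions, not Nvidia’s sizing method: it counts weights only (no KV cache, activations, or runtime overhead), treats 1 GB as 10^9 bytes, and the precision table is an illustrative choice.

```python
# Rough weight-only memory estimate for LLM deployment on an H100 NVL pair.
# Assumptions (not from Nvidia): weights dominate memory, 1 GB = 1e9 bytes,
# and KV cache, activations, and runtime overhead are ignored.

H100_NVL_MEMORY_GB = 188.0  # combined memory of the two bridged GPUs, per the article

# Bytes per parameter for a few common inference precisions (illustrative choices).
BYTES_PER_PARAM = {
    "fp16": 2.0,
    "fp8": 1.0,
    "int4": 0.5,
}


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Return the memory needed just to hold the model weights, in GB."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits(num_params: float, precision: str, capacity_gb: float = H100_NVL_MEMORY_GB) -> bool:
    """True if the weights alone fit within the given memory capacity."""
    return weight_footprint_gb(num_params, precision) <= capacity_gb


if __name__ == "__main__":
    # 5B and 200B are the ends of Buck's quoted range; 175B is GPT-3's published size.
    for params in (5e9, 175e9, 200e9):
        for precision in BYTES_PER_PARAM:
            gb = weight_footprint_gb(params, precision)
            print(f"{params / 1e9:>5.0f}B params @ {precision}: {gb:6.1f} GB, fits={fits(params, precision)}")
```

Under these assumptions, a GPT-3-sized model fits in 188GB at 8-bit precision but not at 16-bit, and a 200-billion-parameter model needs fewer than 8 bits per weight to fit. The article does not say which precision or memory accounting the 200-billion figure assumes.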

The Santa Clara, California, company also highlighted the L40 GPU introduced last September. Based on the Ada Lovelace architecture, the L40 is optimized for graphics and AI-enabled 2D, video, and 3D image generation. Compared to the previous-generation chip, the L40 delivers 7x the inference performance for Stable Diffusion (an AI image generator) and 12x the performance for powering Omniverse workloads.

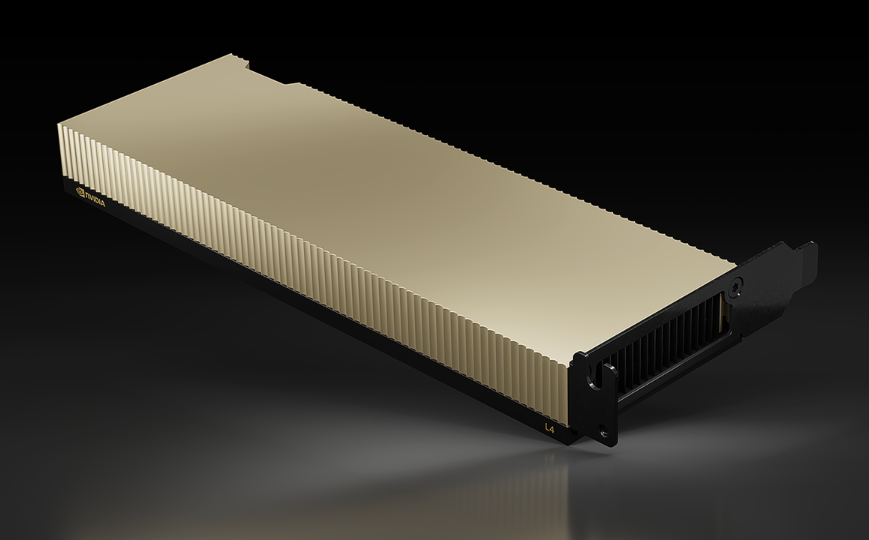

Joining the lineup is the new L4 GPU, also based on the Ada Lovelace architecture but in a smaller single-slot form factor. Pitched as a "universal" workhorse that also targets AI image work, the L4 delivers 120 times faster video inference than CPU servers, the company claims.

Finally, the company talked up its Grace Hopper part as being ideal for graph recommendation models, vector databases, and graph neural nets. With a 900 GB/s NVLink-C2C connection between CPU and GPU, the Grace Hopper “superchip” will deliver 7x faster data transfers and queries than PCIe Gen 5, Nvidia says.
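Nvidia’s 7x figure lines up with simple arithmetic if both numbers are read as combined bandwidth in both directions and PCIe Gen 5 is taken as an x16 link at its nominal 64 GB/s per direction. Those readings are assumptions, not something Nvidia spelled out in the briefing. The Python sketch below does the division and times an idealized transfer of a hypothetical 100 GB table over each link.

```python
# Back-of-the-envelope check of the "7x" figure for NVLink-C2C vs PCIe Gen 5.
# Assumptions: both figures are aggregate (both directions) bandwidth, PCIe Gen 5
# is an x16 link at its nominal 64 GB/s per direction, and protocol overhead is ignored.

NVLINK_C2C_GB_PER_S = 900.0        # CPU-GPU link bandwidth quoted by Nvidia
PCIE_GEN5_X16_GB_PER_S = 2 * 64.0  # nominal x16 bandwidth, both directions combined


def bandwidth_ratio() -> float:
    """How many times more bandwidth NVLink-C2C offers than PCIe Gen 5 x16."""
    return NVLINK_C2C_GB_PER_S / PCIE_GEN5_X16_GB_PER_S


def transfer_seconds(size_gb: float, link_gb_per_s: float) -> float:
    """Idealized time to move size_gb across a link running at full bandwidth."""
    return size_gb / link_gb_per_s


if __name__ == "__main__":
    print(f"ratio: {bandwidth_ratio():.2f}x")
    table_gb = 100.0  # hypothetical table size, for illustration only
    print(f"NVLink-C2C: {transfer_seconds(table_gb, NVLINK_C2C_GB_PER_S):.3f} s")
    print(f"PCIe Gen 5: {transfer_seconds(table_gb, PCIE_GEN5_X16_GB_PER_S):.3f} s")
```

The ratio comes out to about 7.03. In this idealized model, moving the hypothetical 100 GB takes roughly 0.11 s over NVLink-C2C versus about 0.78 s over PCIe; real transfers also pay for latency and protocol overhead.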

“The Grace CPU and the Hopper GPU combined really excel at those very large memory AI tasks for inference, for workloads like large recommender systems, where they have huge embedding tables to help predict what customers need, want, and want to buy,” Buck says. “We see Grace Hopper superchip [bringing] amazing value in the areas of large recommender systems and vector databases.”
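Buck’s point about “huge embedding tables” is easy to quantify. In a recommender, each categorical ID (a user or an item, say) maps to a learned vector, and serving a request gathers the rows for the IDs involved. The Python sketch below, using hypothetical table dimensions, computes a dense table’s footprint and shows the lookup as a plain gather; a real deployment would not keep the table in Python lists.

```python
# Illustrative sketch of why recommender embedding tables get "huge", and of
# the lookup they serve. Table sizes below are hypothetical, not Nvidia figures.

from typing import List


def embedding_table_gb(num_rows: int, dim: int, bytes_per_value: int = 4) -> float:
    """Memory for a dense embedding table (num_rows x dim values), in GB (1e9 bytes)."""
    return num_rows * dim * bytes_per_value / 1e9


def lookup(table: List[List[float]], ids: List[int]) -> List[List[float]]:
    """An embedding lookup is a gather: fetch one row per categorical ID."""
    return [table[i] for i in ids]


if __name__ == "__main__":
    # Hypothetical: one billion item IDs, 128-dimensional FP32 (4-byte) vectors.
    print(f"{embedding_table_gb(1_000_000_000, 128):.0f} GB")
    toy = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
    print(lookup(toy, [2, 0]))  # rows for IDs 2 and 0
```

One billion 128-dimensional FP32 vectors already come to 512 GB, more than a single GPU’s local memory, which is the kind of workload where a fast CPU-GPU link matters.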

All of these platforms ship with Nvidia software, such as its AI Enterprise suite. The suite includes Nvidia’s TensorRT software development kit (SDK) for high-performance deep learning inference and the Triton Inference Server, open-source inference-serving software that helps standardize model deployment.
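Triton’s role in standardizing model deployment shows up in its client interface: models are served behind a common HTTP/gRPC inference protocol (the KServe v2 protocol). The stdlib-only Python sketch below builds, without sending, a request to Triton’s default HTTP port. The model name `my_model`, the input tensor name `INPUT0`, and its shape are hypothetical and would have to match the deployed model’s configuration.

```python
# Minimal sketch of a request to Triton's HTTP endpoint using the KServe v2
# inference protocol. Host, model name, tensor name, and shape are hypothetical.

import json
import urllib.request
from typing import List


def build_infer_request(
    model_name: str,
    input_name: str,
    data: List[float],
    host: str = "localhost",
    port: int = 8000,  # Triton's default HTTP port
) -> urllib.request.Request:
    """Build (but do not send) a v2 inference request for one row of FP32 values."""
    payload = {
        "inputs": [
            {
                "name": input_name,
                "shape": [1, len(data)],  # assume a batch of one row
                "datatype": "FP32",
                "data": data,
            }
        ]
    }
    url = f"http://{host}:{port}/v2/models/{model_name}/infer"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_infer_request("my_model", "INPUT0", [0.5, 1.5, 2.5])
    print(req.get_method(), req.full_url)
    # Against a running server, the request could be sent with:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.loads(resp.read()))
```

Because the payload format is the same for every model the server hosts, pointing a client at a different model mostly means changing the model name and tensor descriptions rather than the client code.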

Some Nvidia partners have already adopted the new hardware. Google Cloud, for instance, is using the L4 in its Vertex AI cloud service. Descript is using L4 GPUs in Google Cloud to power its generative AI service for video and podcast creators; startup WOMBO uses them for its text-to-art generation service; and Kuaishou uses them for its short-video service.

The L4 GPU is available as a private preview on Google Cloud as well as through 30 server makers, including ASUS, Dell Technologies, HPE, Lenovo, and Supermicro. The L40 is available from select system builders, while Grace Hopper and H100 NVL-equipped servers are expected in the second half of the year.

A version of this story first appeared on our sister site Datanami.
